Language Models are Changing Everything

This is a blog post about how the predictive text function on your phone will destroy the world. Or maybe not, but at the very least, it’ll revolutionize the ability of computers to understand and write text, perform tasks involving natural language, and open up some thorny questions about what separates us from the machines.

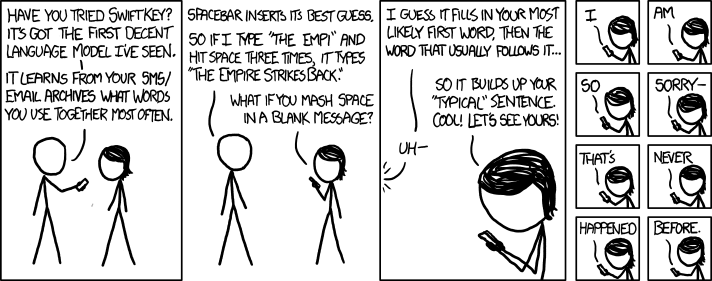

The technical name for what predictive text does is language modelling. The job of a language model is to predict likely words. The simplest language models might just know which English words are most common: “and”, “the”, “of”, “to”. But more complex language models might know which words are most common in the context of other words. For example, “of” is extremely common, but “the of” is extremely rare in English, and so a good language model shouldn’t predict that “of” is coming right after “the”.
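
To make the difference concrete, here is a minimal sketch of both kinds of model in Python. The corpus is a toy one invented for illustration, and the names `unigram_counts`, `bigram_counts` and `next_word_probs` are my own, not part of any library. Real language models are trained on vastly more text, but the counting idea is the same.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
corpus = (
    "the cat sat on the mat and the dog sat on the rug . "
    "the king of the hill and the queen of the valley ."
).split()

# Simplest model: how often does each word occur overall?
unigram_counts = Counter(corpus)

# Context-aware model: how often does each word follow a given previous word?
bigram_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    bigram_counts[prev][nxt] += 1

def next_word_probs(prev):
    """Relative frequency of each word seen right after `prev`."""
    counts = bigram_counts[prev]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

print(unigram_counts.most_common(3))
print(next_word_probs("the"))
```

In this toy corpus “of” appears more than once, yet it never shows up among the predictions after “the”, because the second model only proposes words it has actually seen in that position.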

A good language model needs to understand the real world

Language modelling, like all good games, is easy to get started with but very difficult to master. A language model that just looks at the single preceding word to decide which words to predict next is very easy to design and program, but the tradeoff is that it’s much less accurate than one that can consider the entire context of the document that’s being written. Which word is most likely to follow “President” with a capital P? If it’s a contemporary news story, the answer is probably “Trump”, but an older text could have anything from “Obama” to “Lincoln” to “de Gaulle”. In other words, a state-of-the-art language model needs to understand not only the structure and syntax of English but also context, common sense, and historical facts.
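
Here is a rough sketch of why more context helps, using the “President” example. The four sentences are invented, and `train` and `predict` are hypothetical helper names. A model that sees only one preceding word has to hedge between presidents, while one that also sees the year can commit.

```python
from collections import Counter, defaultdict

# Invented sentences standing in for texts from different eras.
sentences = [
    "in 1863 President Lincoln spoke",
    "in 1864 President Lincoln wrote",
    "in 2010 President Obama spoke",
    "in 2018 President Trump spoke",
]

def train(sentences, context_size):
    """Count which word follows each run of `context_size` words."""
    model = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            model[context][words[i]] += 1
    return model

def predict(model, context):
    """Return (word, probability) pairs, most likely first."""
    counts = model[tuple(context)]
    total = sum(counts.values())
    return [(word, n / total) for word, n in counts.most_common()]

one_word = train(sentences, context_size=1)
two_words = train(sentences, context_size=2)

print(predict(one_word, ["President"]))           # split between several names
print(predict(two_words, ["2010", "President"]))  # the year settles it
```

Simply lengthening the context like this stops working quickly, because most long contexts never appear in the training text at all. That is part of why strong language models are so much harder to build than this sketch suggests.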

Consider the following:

“After I fell in the water, my clothes were all ____”

“It was more expensive to travel by air, but it was worth it because it was so much _______”

“In 1870, the largest political party in the United States was the _________”

A good language model has to know that water makes you wet, that air travel is faster than traveling by car, and that the Republican party maintained its majority in the House of Representatives in the 1870 elections. These are not linguistic facts, but they are relevant facts because humans use language to talk about the real world, and so to predict what you’re going to say, a good language model has to understand the real world.

A human way of learning

Language models are of huge interest in the field of machine learning and AI right now. The reason is not solely that we’re interested in predicting words per se, although making a better predictive keyboard or text generator is interesting to some people. The real appeal is that language models understand language, at least in some mechanical, statistical sense, and this knowledge can be transferred to other tasks. Nearly everything you’d want to do in NLP, from detecting entities to classifying sentiment to linking individual articles into larger stories, benefits from knowledge transfer from a strong language model.

The rise of this kind of ‘transfer learning’ from language models like ULMFiT and BERT has massively advanced the state of the art in natural language processing (NLP). By reading millions or billions of words of text, language models like these gain an understanding of language that makes it much easier to then learn other NLP tasks with much better performance, much lower training time, and much less training data than was previously possible. In effect, rather than ‘starting from scratch’ when these models approach a new problem, they bring a battery of prior skills and knowledge to the table. This is a much more ‘human’ way of learning – we make use of our lifetime of education and experience in everything we do; we do not re-learn language every time we need to read a document. And now machines can do the same.

Two or three years ago, an ML engineer working on an NLP problem would start with a set of pre-trained word vectors at most. Now they start with BERT, a gargantuan neural network with a rich vocabulary and understanding of grammar and syntax, and merely fine-tune it on their problem of choice. Problems that would have required hundreds of thousands of training examples can now be solved with a tenth or a hundredth of that amount, and in much less time. The potential of deep learning has been obvious for almost a decade now, but its practical application has been limited by the need to painstakingly gather large volumes of training data. The cost and time involved in manually labelling data has usually been enough to put ML solutions out of reach for small projects, but that’s all changing. What new possibilities open up when instead of a million training examples, you only need a thousand that you could label in a single day?

You may be feeling a little cheated at this point, though. At the start of this article, I promised I’d tell you about how language models were going to destroy the world, and you could justifiably accuse me of failing to deliver. New ways to train machine learning models are very cool if you work in NLP, but they’re not apocalyptic.

What does the machine know?

On that note, enter GPT-2. At the time of this writing, GPT-2 is OpenAI’s latest language model and, as far as I’m aware, the strongest language model in existence. GPT-2 is sufficiently advanced that, given a prompt, it can write paragraphs on a theme that are at least somewhat coherent. (You can try this with your phone’s predictive text too, but you’ll find it quickly falls into a loop or just writes nonsense after a few words, as it’s a much weaker model.) More surprisingly, the authors of GPT-2 found that it could implicitly perform more complex linguistic tasks.

Let’s say what we really care about is summarizing documents. Rather than train a language model and then transfer its knowledge to a model for that task, the creators of GPT-2 just gave the language model the document to be summarized, wrote “tl;dr” at the end and then asked it what came next. It works because GPT-2 is a sufficiently strong language model that it understands that “tl;dr” is internet slang that precedes a short summary of the text above it, and so understands that what comes next is likely a brief summary. GPT-2 acquired that knowledge without specifically being asked to, just from learning to model language.
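
In code, the trick is almost embarrassingly small. The sketch below assumes a hypothetical `generate` function that takes a prompt and returns the model's continuation; it is not OpenAI's API, and the exact prompt formatting (a newline before “tl;dr”) is my own guess. A dummy stand-in is included so the example runs without any model installed.

```python
from typing import Callable

def summarize_with_prompt(document: str, generate: Callable[[str], str]) -> str:
    """Append "tl;dr" to the document and let the model continue.

    `generate` is a hypothetical stand-in for any function that takes a
    prompt and returns the language model's continuation as text.
    """
    prompt = document.rstrip() + "\ntl;dr"
    continuation = generate(prompt)
    # Keep only the first paragraph of the continuation as the summary.
    return continuation.strip().split("\n\n")[0]

# A dummy "model" so the sketch runs end to end; a real one would go here.
def fake_generate(prompt: str) -> str:
    assert prompt.endswith("tl;dr")
    return " Language models can summarize text.\n\nUnrelated rambling."

print(summarize_with_prompt("A long article about language models...", fake_generate))
```

Notice that nothing here is specific to summarization. All of the task knowledge lives in the model, and the code only decides what text to put in front of it.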

It can probably do a lot of other things too, if we can only think of the right prompts to elicit that implicit knowledge from it.

For example, OpenAI didn’t try sentiment classification with GPT-2, but I bet if you fed it a news article about a company, and then at the end wrote “So, in summary, is this good or bad news for [company]? The answer is…” and left it to continue writing, I think you’d find that it had a pretty good idea about sentiment too.
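
One way to set up that experiment, sketched under assumptions: suppose we had a function `next_word_probs` that returns the model's probability for each possible next word after a prompt. That function is hypothetical, and the dummy version below just looks for a keyword. We could then compare how likely the model finds “good” versus “bad” as the next word.

```python
from typing import Callable, Dict

def classify_sentiment(
    article: str,
    company: str,
    next_word_probs: Callable[[str], Dict[str, float]],
) -> str:
    """Ask the question from the text and compare two candidate answers.

    `next_word_probs` is a hypothetical function mapping a prompt to the
    model's probability for each possible next word.
    """
    prompt = (
        f"{article.rstrip()}\n"
        f"So, in summary, is this good or bad news for {company}? "
        "The answer is"
    )
    probs = next_word_probs(prompt)
    good = probs.get("good", 0.0)
    bad = probs.get("bad", 0.0)
    return "good" if good >= bad else "bad"

# Dummy model for demonstration only: it just looks for one keyword.
def fake_next_word_probs(prompt: str) -> Dict[str, float]:
    if "record profits" in prompt:
        return {"good": 0.7, "bad": 0.1}
    return {"good": 0.2, "bad": 0.6}

print(classify_sentiment("Acme reported record profits.", "Acme", fake_next_word_probs))
print(classify_sentiment("Acme recalled its product.", "Acme", fake_next_word_probs))
```

Whether a real model would answer well is exactly the open question; the sketch only shows how little scaffolding the experiment needs.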

I said earlier that we’re interested in using language models to do things beyond just language modelling, but if we took a sufficiently expansive view of what language modelling means we’d realize that it already contains many of these tasks! Rather than fine-tuning a language model like BERT on a new task, why not just ask GPT-2 if it already knows how to do it?

Capturing the human experience

In fact, language modelling is such an all-encompassing thing that Alan Turing suggested that a good test of whether a machine was truly intelligent would be an ‘imitation game’, which came to be known as the Turing test. In this game, a human judge speaks (via some kind of text connection, like a messenger program) to two subjects, one a human and the other a machine. If the judge cannot determine which is which, or guesses incorrectly, the machine passes the test. This is just another language modelling task, but Turing argued that it contained all the elements of true, human-like intelligence! He understood that modelling language sufficiently well requires a whole host of implicit faculties, knowledge and common sense; without them, your artificial author will very quickly start to sound very strange.

You might object that a mere statistical language model, even one as sophisticated as GPT-2, cannot really be ‘intelligent’ or human-like. This is fair: the Turing test certainly doesn’t capture every aspect of human experience.

A language model has no ability to comprehend images or sounds, or control a body the way humans do. Perhaps a skillful human judge could distinguish a human from a robot by asking it about sensations or experiences that the robot doesn’t have – or perhaps the robot would learn from reading people’s descriptions of those experiences and give an entirely convincing account regardless. Could you argue that the robot then understands those things, even without direct experience?

Either way, I wouldn’t lean too heavily on the diversity of human experience as an objection when we have machines that have incredible visual imaginations and can paint scenes in a variety of styles, both realistic and abstract; that can control their bodies to leap through challenging obstacle courses; that can both understand and generate natural speech; and that can play a Go move so innovative it confounds both the world champion and the commentator. In short, we may be running out of uniquely human things to feel special about, and sometimes it can seem like the only thing AI is missing is a grand meta-model that can synthesize all these pieces into a personified whole.

OpenAI felt that GPT-2 was too powerful to release publicly. In particular, its ability to generate paragraphs or even documents of coherent, human-sounding text on a theme was considered very dangerous in the era of fake news and political Twitter bots. Despite that concern, they published a paper describing the process of creating and training the model in detail, and in almost all technical respects it is very similar to the open-source models that preceded it ¹. The major issue preventing other AI engineers from replicating or exceeding GPT-2 is not a deep technical secret – it’s just that they don’t have OpenAI’s training data.

But wait. I said earlier that training data for a language model consists simply of large blocks of English text. OpenAI put in significant effort to gather 40GB of high-quality text to train their model, but Parse.ly works with hundreds of publishers worldwide to help them track attention over posts and articles containing far, far more text than that. 40GB would be just a drop in the ocean for our systems. And if training data is the only thing you need…

Read back up. How sure are you that a human wrote all of this?